I started thinking about this topic more than a decade ago, when the first iPad was released, but it took me a while to adopt the practice in my own toolchain.

Here I want to stop for a moment, gather everything I like and dislike about it, and create a roadmap for an even better way to do personal computing (especially in the context of a software developer's workflow).

What is Remote Development anyway?

It means hosting your programming environment in the cloud instead of running it on a laptop. The whole thing may sound a bit complicated, but it has plenty of clear benefits:

✅ Your server or VM runs 100% of the time. No need to wait for long tasks to complete, just close your laptop and go.

✅ Yes, you can work from anywhere! Move around easily without being tied to some rig on a table.

✅ Very low requirements for your personal hardware. The iPad finally makes sense as a truly personal computing device.

✅ Use many operating systems simultaneously without your laptop burning a hole in your jeans.

✅ You now have many ways to make better use of modern cloud features from Azure or any other provider of your choice.

To name just a few.

My road to Remote Development

Two years ago my Mac mini began to slow me down. The project I was working on grew to the point where running it locally was no longer an option. I could have fired up a Windows VM, for example, but there was no room left for the other services I needed because the host system was already overheating.

So instead of buying new hardware I just spun up a Linux VM on DigitalOcean and deployed half of the project there. The interop between the two halves was implemented using SSH tunneling.

With a setup like that, I had to manage the codebase in two different places, and it was really annoying. But then I learned about a tool called...

Mutagen

Much to my regret, neither Krang nor Shredder is involved 🐢

Mutagen won't turn you into a 90s superhero. Instead, it helps you set up seamless sync between your local code repository and a remote server.

That way you can manage your code with your favorite tools, like Nova and Git Tower, and execute it on the remote server.

Personally I think this is the most convenient approach for most people:

- No need to give up your preferred tools.

- No need to maintain a separate way to edit your code on the server. You can use a terminal editor or run a web version of VSCode (Theia, Coder), but do you want to?

- With a couple of simple shell scripts, you can migrate from one server to another in no time.

- You always have an option to continue working locally (on a plane or during some other network disruption).

Like any good tool, Mutagen is really simple to set up.

First, set up an SSH connection to the remote server. Here is an example section in the .ssh/config file:
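A minimal sketch of such a section, written as a small shell helper so the values are easy to swap. The alias `remdev`, the address `203.0.113.10`, the user `dev`, and the key path in the example are placeholders, not values from my setup.

```shell
# Print a Host section for ~/.ssh/config.
# Alias, address, user and key path are placeholders: pass your own.
ssh_host_section() {
  cat <<EOF
Host $1
    HostName $2
    User $3
    IdentityFile $4
    ServerAliveInterval 60
EOF
}

# Example (appends to your config, so review the output first):
#   ssh_host_section remdev 203.0.113.10 dev '~/.ssh/id_ed25519' >> ~/.ssh/config
```

After that, `ssh remdev` should log you in without any extra flags.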

Then get the binary, either via Homebrew or by downloading it manually, and cook up a configuration in <project_root>/.mutagen like this:
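A sketch of what such a configuration might contain, assuming a recent Mutagen release that reads YAML. The ignored paths are only typical examples, and the helper prints to stdout so you can redirect the result to wherever your Mutagen version expects its configuration.

```shell
# Print a Mutagen configuration that keeps generated folders out of the sync.
# The ignored paths are examples; adjust them to your project.
mutagen_config() {
  cat <<'EOF'
sync:
  defaults:
    ignore:
      paths:
        - "node_modules"
        - ".DS_Store"
EOF
}
```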

You need it to exclude certain files from being synced. For example, you probably don't need to copy node_modules from your server back to the local folder.

Mutagen is built around sync sessions between your local and remote folders. To create a session, run the following command:
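A minimal sketch, assuming a Mutagen version that groups these commands under `mutagen sync` (older releases may spell them differently). The helper name `start_sync` and the host alias `remdev` are mine.

```shell
# Start syncing a local folder with a folder on the remote host.
# $1 = local folder, $2 = SSH host alias, $3 = remote folder
start_sync() {
  mutagen sync create --ignore=node_modules "$1" "$2:$3"
}

# Example: start_sync src remdev '~/project/src'
```

The remote path is quoted so the tilde is not expanded by the local shell.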

Mutagen will create a session, print out its ID, then start syncing files between your local src folder and ~/project/src on the remote server.

Advanced usage

To get back to the previous session:
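A one-function sketch, again assuming the `mutagen sync` command group. The helper name `resume_sync` is hypothetical.

```shell
# Resume a paused session.
# $1 = the session ID printed at creation (`mutagen sync list` shows it again).
resume_sync() {
  mutagen sync resume "$1"
}
```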

And to forget the obsolete one:
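The counterpart, under the same assumption about the `mutagen sync` command group. The helper name `forget_sync` is hypothetical.

```shell
# Terminate a session for good. Synced files stay where they are on both sides.
# $1 = session ID
forget_sync() {
  mutagen sync terminate "$1"
}
```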

It doesn't seem so easy anymore, right?

Shell scripts to the rescue! Create a bunch of variables in your .bashrc or in a separate file. I used to keep mine in .remdev:
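Something along these lines would do. The variable names and all values are placeholders I made up for illustration.

```shell
# ~/.remdev: source this from .bashrc. Every value is a placeholder.
export REMDEV_HOST='remdev'            # Host alias from ~/.ssh/config
export REMDEV_REMOTE_ROOT='~/project'  # quoted so the tilde is not expanded locally
export REMDEV_LOCAL_DIR='src'          # code folder, relative to the project root
```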

Then add some scripts to actually manage the Mutagen sessions. The first one, called dev, starts or resumes the sync process. It uses a special file named .lastsync to keep the session ID. Instead of hard-coding the string src as the code folder, you may want to use another variable relative to the current working directory.
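A sketch of how dev could look, written as a shell function. It assumes the `REMDEV_*` variables are the hypothetical ones from .remdev, and that Mutagen reports a new session with a line of the form `Created session <id>`. Check both against your own setup.

```shell
# Fall back to placeholder values when ~/.remdev has not been sourced.
[ -n "${REMDEV_HOST:-}" ] || REMDEV_HOST='remdev'
[ -n "${REMDEV_REMOTE_ROOT:-}" ] || REMDEV_REMOTE_ROOT='~/project'
[ -n "${REMDEV_LOCAL_DIR:-}" ] || REMDEV_LOCAL_DIR='src'

dev() {
  if [ -s .lastsync ]; then
    # A session ID was saved earlier: pick that session up again.
    mutagen sync resume "$(cat .lastsync)"
    return
  fi
  # No saved session: create one and remember its ID.
  # Assumes Mutagen reports it as "Created session <id>".
  dev_output=$(mutagen sync create --ignore=node_modules \
    "$REMDEV_LOCAL_DIR" \
    "$REMDEV_HOST:$REMDEV_REMOTE_ROOT/$REMDEV_LOCAL_DIR") || return 1
  printf '%s\n' "$dev_output"
  printf '%s\n' "$dev_output" | awk '/Created session/ { print $3 }' > .lastsync
}
```

Run it from the project root, so that .lastsync lives next to the code it belongs to.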

And the second one, called undev, gracefully terminates the session:
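A matching sketch of undev, under the same assumptions: the session ID sits in .lastsync in the current folder, and Mutagen offers `mutagen sync terminate`.

```shell
undev() {
  if [ ! -s .lastsync ]; then
    echo "undev: no session recorded in .lastsync" >&2
    return 1
  fi
  # Terminate the recorded session, then forget its ID only if that worked.
  mutagen sync terminate "$(cat .lastsync)" && rm -f .lastsync
}
```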

With both scripts in your $PATH, run dev or undev and see the magic 🧝‍♀️

The Last Resort

I started using the approach above, and while the coding experience was excellent, I immediately ran into some restrictions.

How am I supposed to set up NAT on a remote server, fire up three VMs, then inject a Burp Suite proxy between them?

And although it is totally doable to tunnel connections from the remote server to your local machine, it doesn't suit me: the changes would be frequent and would require a lot of manual intervention or configuration tweaks.

So I decided to run a full-blown desktop on a remote server.

Dramatis Personae

- A dedicated machine with 8 cores and 128 GB of RAM from Hetzner

- Windows 10 Pro license

- Some free time

You probably don't want to have a Windows VM sitting on a public interface. So the very first step is to prepare a Linux host; Ubuntu or Debian will do. The installation process is easy, so let me cover only the steps after the first boot.

- Configure the SSH server to accept only key-based authentication.

- Configure the firewall to accept connections only on the SSH port.

- Install KVM.

Here is an example of the script to set up iptables:
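A minimal sketch of such a script, covering IPv4 inbound traffic only. The `IPT` and `SSH_PORT` variables are my own additions: setting `IPT="echo iptables"` prints the rules instead of applying them, which is worth doing before you risk locking yourself out.

```shell
# Set IPT="echo iptables" to print the rules instead of applying them.
IPT=${IPT:-iptables}

setup_firewall() {
  $IPT -P INPUT ACCEPT   # stay reachable while the chain is rebuilt
  $IPT -F INPUT
  $IPT -A INPUT -i lo -j ACCEPT
  $IPT -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT
  $IPT -A INPUT -p tcp --dport "${SSH_PORT:-22}" -j ACCEPT
  $IPT -P INPUT DROP     # everything else is dropped
}

# Run as root: setup_firewall
# The rules are lost on reboot unless you persist them, and ip6tables
# needs the same treatment if the host has an IPv6 address.
```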

You can use ufw to make things simpler:
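A sketch of the ufw equivalent. As before, the `UFW` and `SSH_PORT` variables are my own additions so the commands can be previewed with `UFW="echo ufw"`.

```shell
# Set UFW="echo ufw" to print the commands instead of running them.
UFW=${UFW:-ufw}

setup_ufw() {
  $UFW default deny incoming
  $UFW default allow outgoing
  $UFW allow "${SSH_PORT:-22}/tcp"
  $UFW --force enable   # --force skips the interactive confirmation
}

# Run as root: setup_ufw
```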

Setting up KVM roughly looks like this:
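A rough sketch for a Debian or Ubuntu host. The package list is what I would expect to need on current releases and may differ on yours. The function names are hypothetical.

```shell
# Succeeds when the CPU advertises hardware virtualization (Intel VT-x or AMD-V).
# $1 = cpuinfo file to inspect, defaults to /proc/cpuinfo
kvm_supported() {
  grep -Eqw 'vmx|svm' "${1:-/proc/cpuinfo}"
}

# Install QEMU/KVM and libvirt, then let a user manage VMs without sudo.
# $1 = user name. Run as root; group membership applies from the next login.
install_kvm() {
  apt-get update
  apt-get install -y qemu-system-x86 libvirt-daemon-system libvirt-clients virtinst
  usermod -aG libvirt,kvm "$1"
  systemctl enable --now libvirtd
}

# Example: kvm_supported && install_kvm "$SUDO_USER"
```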

Get the installation media from the Microsoft website, and some additional drivers from the Fedora Project (virtio-win.iso, virtio-win_amd64.vfd). Create the VM:
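A sketch of a `virt-install` call for this. The VM name, memory, disk size, and file locations are placeholders, and `VIRT_INSTALL="echo virt-install"` previews the command without creating anything.

```shell
# Set VIRT_INSTALL="echo virt-install" to preview the command.
VIRT_INSTALL=${VIRT_INSTALL:-virt-install}
IMAGES=${IMAGES:-/var/lib/libvirt/images}

create_windows_vm() {
  # VNC listens on loopback only, so it is reachable just through SSH.
  # Drop the floppy line if your machine type has no floppy controller;
  # the virtio-win ISO carries the drivers as well.
  $VIRT_INSTALL \
    --name win10 \
    --memory 32768 --vcpus 8 --cpu host-passthrough \
    --os-variant win10 \
    --disk "path=$IMAGES/win10.qcow2,size=200,format=qcow2,bus=virtio" \
    --cdrom "$IMAGES/Win10.iso" \
    --disk "path=$IMAGES/virtio-win.iso,device=cdrom" \
    --disk "path=$IMAGES/virtio-win_amd64.vfd,device=floppy" \
    --network network=default,model=virtio \
    --graphics vnc,listen=127.0.0.1 \
    --noautoconsole
}
```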

Connect to the VM through VNC. Use an SSH tunnel so the VNC port is never exposed. Software like Jump Desktop will do that for you automatically. Scan for drivers on the floppy during installation.
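If your client does not build the tunnel for you, a manual one might look like this. The sketch assumes the VM's VNC server listens on 127.0.0.1:5900 on the host, which is the usual port for the first display. The helper name is hypothetical.

```shell
# Forward local port 5900 to the VNC server on the host's loopback interface.
# $1 = SSH host alias. Keeps running until you press Ctrl-C.
vnc_tunnel() {
  ssh -N -L 5900:127.0.0.1:5900 "$1"
}

# Example: vnc_tunnel remdev, then point your VNC client at localhost:5900
```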

Add yourself to the Remote Desktop Users group, set up RDP, and disable VNC in the VM's configuration. If you need to migrate to another server, dump your configuration and copy the disk image. Enjoy all the goodness of Hyper-V, WSL, etc.
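Dumping the configuration can be sketched with `virsh dumpxml`. The helper name and the VM name `win10` are placeholders, and `VIRSH="echo virsh"` gives a dry run.

```shell
# Set VIRSH="echo virsh" for a dry run.
VIRSH=${VIRSH:-virsh}

# Save a VM's definition so it can travel together with the disk image.
# $1 = VM name, $2 = folder to write <name>.xml into
export_vm_config() {
  $VIRSH dumpxml "$1" > "$2/$1.xml"
}

# On the new host, after copying the XML and the disk image to the same paths:
#   virsh define win10.xml
```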

It is the most versatile but also the most expensive way to do remote development, in terms of both money and time. Getting the most out of the server's resources is hard. You have to:

- Correctly adjust vCPU pinning according to your CPU's topology.

- Apply many small tweaks like Hugepages and separate I/O threads.

- Measure results and repeat.
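Two small helpers illustrate the first two items. They are sketches only: the right CPU numbers depend on your topology (`lscpu -e` shows it), the page arithmetic assumes the common 2 MiB huge page size, and the function names are mine.

```shell
# Set VIRSH="echo virsh" for a dry run.
VIRSH=${VIRSH:-virsh}

# Pin vCPU 0, 1, 2... of a VM to the given host CPUs, in order.
# $1 = VM name, remaining arguments = host CPU numbers
pin_vcpus() {
  pin_vm=$1
  shift
  pin_index=0
  for pin_cpu in "$@"; do
    $VIRSH vcpupin "$pin_vm" "$pin_index" "$pin_cpu" --config
    pin_index=$((pin_index + 1))
  done
}

# Number of 2 MiB huge pages needed to back a VM with $1 MiB of RAM.
hugepages_for() {
  echo $(( $1 / 2 ))
}

# Example: pin_vcpus win10 2 3 4 5
```

With `--config` the pinning is written to the VM's definition and applies from its next start.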

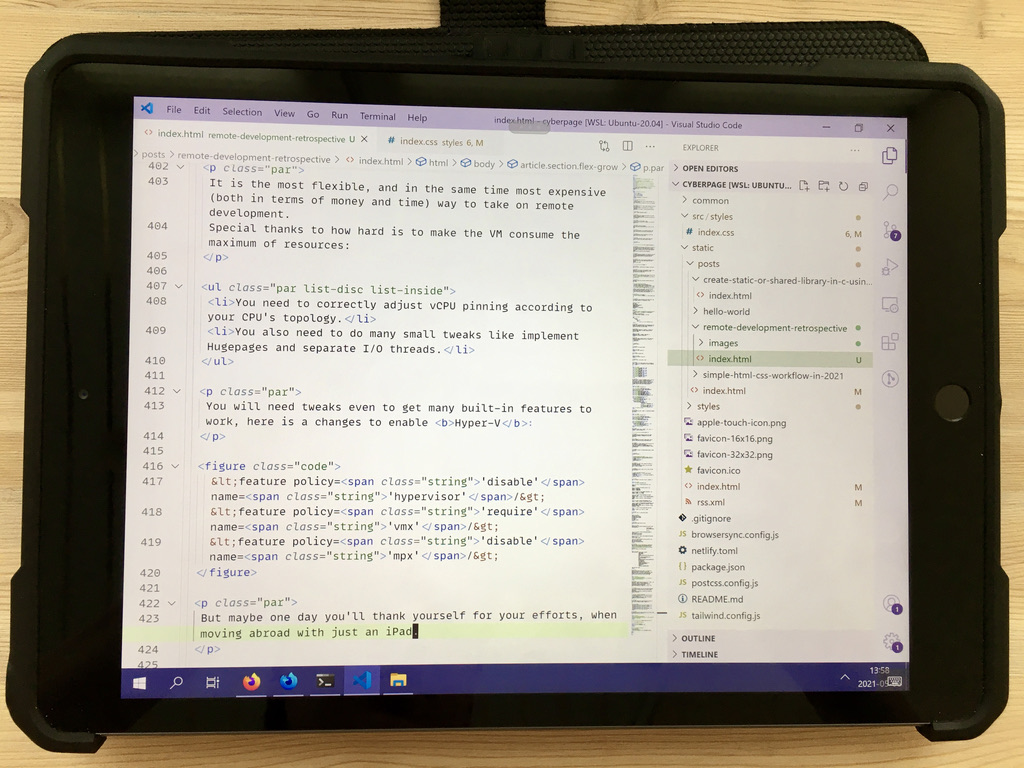

Many standard features need configuration changes to work under KVM, Hyper-V for example:
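As far as I know, the main prerequisite for Hyper-V is nested virtualization on the host. Here is a sketch of how to check for it, with the helper name being my own. The default path assumes an Intel host.

```shell
# Succeeds when nested virtualization is switched on for the loaded KVM module.
# $1 = parameter file; defaults to the Intel module (use kvm_amd on AMD hosts).
nested_enabled() {
  case "$(cat "${1:-/sys/module/kvm_intel/parameters/nested}" 2>/dev/null)" in
    Y|1) return 0 ;;
    *)   return 1 ;;
  esac
}

# To switch it on persistently (as root, then reload the module or reboot):
#   echo 'options kvm_intel nested=1' > /etc/modprobe.d/kvm-nested.conf
# The VM also needs to see the host CPU, e.g. --cpu host-passthrough in virt-install.
```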

More tweaks have to be made in the VM to get the most from the server. Maybe one day your efforts will pay off. Maybe when you only need an iPad for travel?

Extra goodness

Thoughts about the Future

I have been running a setup like that for almost two years. Windows makes a perfect host for any programming activity. I can no longer remember what fan noise sounds like. Still, some hardware and software is needed to maintain access.

Imagine an app like 1Password. Single passphrase keeps all secrets. How about making a web gateway to my digital life?

My first step is to create an extension for Visual Studio Code so I can navigate my monorepo in a single window. The next is to run it in Theia alongside my other favourite extensions, like Tabnine.

The moment I finish converting all the software I need into web applications, available through a single index page, will be truly liberating.